Google presents two complementary techniques to significantly improve language models without massive extra compute:

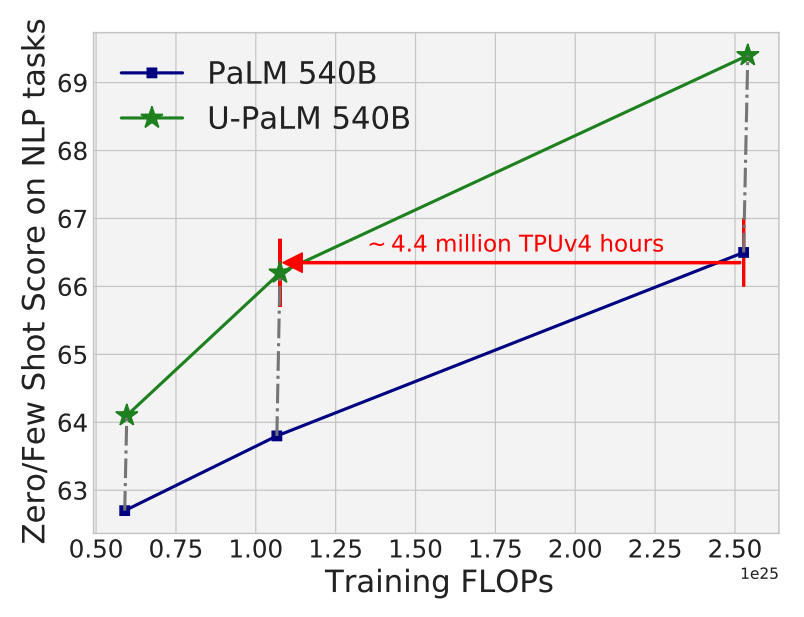

UL2R (UL2 Repair): an additional stage of continued pre-training with the UL2 (Unified Language Learner) objective, which trains language models on denoising tasks where the model has to recover missing sub-sequences of a given input. Applying it to PaLM results in a new language model, U-PaLM.
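To make the denoising idea concrete, here is a minimal sketch of span corruption: chosen sub-sequences are replaced by sentinel tokens in the input, and the target lists each sentinel followed by the tokens it hides. This is a simplified illustration, not the actual UL2 objective, which mixes several denoising configurations. The function name `corrupt_spans`, the hand-picked spans, and the `<extra_id_N>` sentinel format are assumptions made for this example.

```python
def corrupt_spans(tokens, spans):
    """Mask the given (start, length) spans and build a denoising pair.

    Hypothetical helper for illustration only; spans must not overlap.
    Returns (inputs, targets), both lists of string tokens.
    """
    inputs, targets = [], []
    cursor = 0
    for i, (start, length) in enumerate(sorted(spans)):
        sentinel = f"<extra_id_{i}>"  # assumed sentinel naming
        # Keep the untouched tokens before the span, then mark the gap.
        inputs.extend(tokens[cursor:start])
        inputs.append(sentinel)
        # The target repeats the sentinel, then the hidden tokens.
        targets.append(sentinel)
        targets.extend(tokens[start:start + length])
        cursor = start + length
    # Keep whatever follows the last masked span.
    inputs.extend(tokens[cursor:])
    return inputs, targets


tokens = "the quick brown fox jumps over the lazy dog".split()
inputs, targets = corrupt_spans(tokens, [(1, 2), (6, 1)])
print(" ".join(inputs))
print(" ".join(targets))
```

In real training the spans are sampled at random rather than fixed by hand, and the model is trained to generate the target sequence given the corrupted input.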

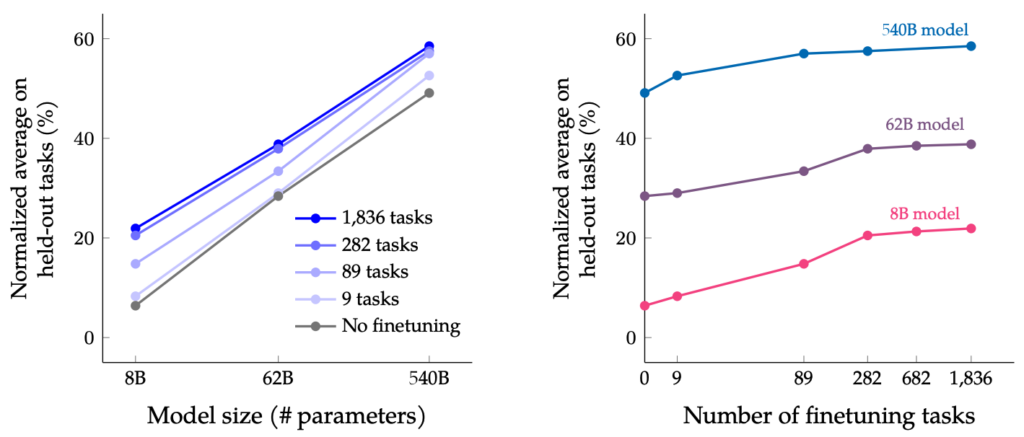

Flan (Finetuned Language Net): instruction fine-tuning on a collection of NLP datasets phrased as instructions. Applying it to PaLM results in the language model Flan-PaLM.
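As a rough illustration of what "phrased as instructions" can mean, the sketch below turns a labeled dataset record into an input/target pair using a natural-language template. The helper `to_instruction_example`, the template wording, and the sample review are all hypothetical and are not taken from the Flan data collection.

```python
def to_instruction_example(template, fields, answer):
    """Render one labeled record as an instruction-style training pair.

    Hypothetical helper for illustration; `template` uses str.format
    placeholders that are filled in from `fields`.
    """
    return {"input": template.format(**fields), "target": answer}


# Assumed template for a sentiment classification dataset.
template = (
    "Is the sentiment of the following review positive or negative?\n\n"
    "Review: {text}"
)
example = to_instruction_example(
    template,
    {"text": "A delightful, well-paced film."},
    "positive",
)
print(example["input"])
print(example["target"])
```

Fine-tuning then proceeds on many such pairs drawn from many tasks, so the model learns to follow the instruction rather than one fixed task format.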

Combining the two approaches and applying them to PaLM results in Flan-U-PaLM.
