On Dec 6, 2023, Google launched its new multimodal model Gemini that will work across Google products like search, ads, and the chatbot Bard. Gemini was trained jointly across text, image, audio, and video and has a 32K context window.

Gemini 1.0 comes in three different sizes:

- Gemini Ultra: largest and most capable model to be released at the beginning of 2024

- Gemini Pro: best model that is available immediately within Bard in 170 countries (but not yet in the EU and UK)

- Gemini Nano: most efficient model for mobile devices, with the same availability as Pro; it comes in two variants, Nano-1 (1.8B parameters) and Nano-2 (3.25B parameters)

Achievements and capabilities:

- Gemini can recognize images and speak in real time (unfortunately, the demo was fake)

- Gemini Ultra is the first AI model to outperform human experts on the exam benchmark MMLU.

- It has advanced math and coding capabilities

- Gemini Ultra beats GPT-4 in 30 out of 32 commonly used benchmarks.

- Gemini Pro beats GPT-3.5 in 6 out of 8 benchmarks.

Some more sources: Google release note, Gemini report, Google Developer blog, YouTube: Matt Wolfe, AI Explained.

Interestingly, Gemini was trained on a large fleet of TPUv4 accelerators across multiple data centers. At such scales, machine failures due to cosmic rays are commonplace and have to be handled (Gemini report, page 4).

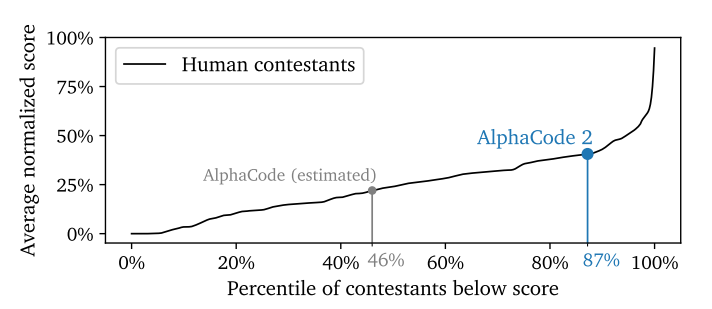

When paired with search and tool-use techniques, Gemini forms the basis for advanced reasoning systems like AlphaCode 2, which excels in competitive programming challenges against human competitors. AlphaCode 2, which is based solely on Gemini Pro and not yet on Gemini Ultra, solves 43% of problems on Codeforces, 1.7x as many as its predecessor, and performs better than an estimated 85% of human competitors. However, because it needs intensive compute to generate, filter, and score up to a million solutions per problem, AlphaCode 2 is currently not feasible for customer use, although Google is working on this.
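
To make the generate, filter, and score loop more concrete, here is a toy sketch in Python. It does not reflect AlphaCode 2's actual implementation: the candidate pool, the function names (`generate`, `filter_candidates`, `score`, `solve`), and the agreement-based scoring heuristic are all assumptions made purely for illustration. A real system would sample programs from a language model and use a far more sophisticated scorer.

```python
import random
from typing import Callable, List, Optional, Tuple

Candidate = Tuple[str, Callable[[int], int]]

# Hypothetical stand-in for model samples. The toy task is "double the input".
CANDIDATE_POOL: List[Candidate] = [
    ("double", lambda x: 2 * x),
    ("add_self", lambda x: x + x),
    ("square", lambda x: x * x),
    ("add_two", lambda x: x + 2),
    ("identity", lambda x: x),
]


def generate(n: int, rng: random.Random) -> List[Candidate]:
    """Generate: draw n candidate solutions (here, randomly from a fixed pool)."""
    return [rng.choice(CANDIDATE_POOL) for _ in range(n)]


def filter_candidates(samples: List[Candidate],
                      examples: List[Tuple[int, int]]) -> List[Candidate]:
    """Filter: keep only candidates that pass every public example test."""
    return [(name, fn) for name, fn in samples
            if all(fn(x) == y for x, y in examples)]


def score(fn: Callable[[int], int], probe_inputs: List[int],
          survivors: List[Candidate]) -> int:
    """Score (assumed heuristic): count survivors that behave identically
    on extra probe inputs, so widely agreed-upon behavior ranks higher."""
    signature = tuple(fn(x) for x in probe_inputs)
    return sum(1 for _, other in survivors
               if tuple(other(x) for x in probe_inputs) == signature)


def solve(n_samples: int, examples: List[Tuple[int, int]],
          probe_inputs: List[int], seed: int = 0) -> Optional[Candidate]:
    """Run generate -> filter -> score and return the top-scoring candidate."""
    rng = random.Random(seed)
    survivors = filter_candidates(generate(n_samples, rng), examples)
    if not survivors:
        return None
    return max(survivors, key=lambda c: score(c[1], probe_inputs, survivors))


if __name__ == "__main__":
    # The single public example (2 -> 4) is deliberately weak: "square" and
    # "add_two" also pass it, so the scoring step has to separate them.
    best = solve(n_samples=200, examples=[(2, 4)], probe_inputs=[3, 5])
    if best is not None:
        print(best[0], best[1](7))
```

Even in this toy version, the cost grows with the number of samples, since every candidate has to be executed against the tests and then scored. That gives a rough intuition for why sampling up to a million solutions is expensive.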