In an announcement on Feb 22, 2023, and in a corresponding Nature paper, Google demonstrated for the first time that logical qubits can actually reduce the error rate of a quantum computer.

A physical qubit stands in a 1-to-1 relation to a qubit in a quantum algorithm: each qubit of the algorithm is realized by exactly one physical quantum system. The problem with physical qubits is that noise, for example thermal noise, can make them decohere, so that they no longer form a quantum system in a superposition of the bit states 0 and 1. How often this decoherence happens is quantified by the quantum error rate. This error rate affects a quantum algorithm in two ways. First, the more qubits an algorithm involves, the higher the probability of an error. Second, the longer a qubit is used and the more gates act on it, i.e. the deeper the algorithm is, the higher the probability of an error.
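
To get a feeling for both effects, here is a minimal back-of-the-envelope sketch. It assumes a deliberately simple model that is not taken from the Google paper: every qubit has the same independent error probability `p` in every layer of the algorithm, so a run is error-free only if all `n_qubits * depth` error opportunities pass without an error. The function name and the value of `p` are illustrative choices.

```python
def circuit_failure_probability(p: float, n_qubits: int, depth: int) -> float:
    """Probability of at least one error in a circuit.

    Toy model (an assumption for illustration): each qubit suffers an
    independent error with probability p in each of `depth` layers.
    """
    error_opportunities = n_qubits * depth
    # The run succeeds only if none of the opportunities produces an error.
    return 1.0 - (1.0 - p) ** error_opportunities


if __name__ == "__main__":
    p = 0.001  # illustrative per-qubit, per-layer error probability
    for n_qubits, depth in [(5, 10), (50, 10), (50, 100)]:
        prob = circuit_failure_probability(p, n_qubits, depth)
        print(f"{n_qubits:3d} qubits, depth {depth:3d}: P(error) = {prob:.3f}")
```

Even with a small `p`, the failure probability in this model approaches 1 quickly as either the number of qubits or the depth grows, which is why wide and deep algorithms are out of reach without error correction.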

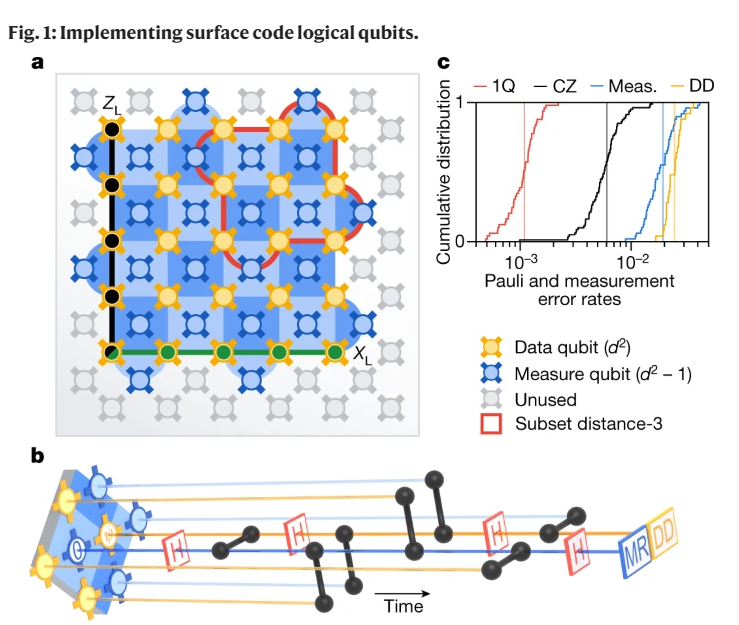

It is surprising that quantum error correction algorithms can correct physical qubit errors without directly measuring the qubits that carry the information (which would always destroy their quantum state). Instead, auxiliary qubits are measured, and their outcomes reveal whether an error occurred without revealing the stored information. Such error correction codes have been known since at least 1996. A logical qubit is formed by distributing the information of one qubit over several physical qubits in such a way that certain quantum errors can be detected and corrected. However, the physical qubits that make up the logical qubit are themselves subject to the quantum error rate. Thus there is an obvious trade-off: involving more physical qubits for a longer time could increase the error rate, while the correction mechanism reduces it. Which effect prevails depends on the error correction code used as well as on the error rate of the underlying physical qubits. Google has now demonstrated for the first time that, in their system, using a so-called surface code logical qubit actually provides an advantage.
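
The trade-off can be illustrated with a much simpler code than the surface code. The sketch below is a toy model under stated assumptions, not Google's method: it uses a classical repetition code with an odd number of copies (`distance`), assumes each copy flips independently with probability `p`, and assumes a perfect majority vote. The function name is hypothetical.

```python
from math import comb


def logical_error_rate(p: float, distance: int) -> float:
    """Logical error rate of a repetition code under majority vote.

    Toy model (an assumption for illustration): `distance` is odd, each
    copy flips independently with probability p, and decoding is perfect.
    The vote fails when more than half of the copies have flipped.
    """
    first_failing = distance // 2 + 1
    return sum(
        comb(distance, k) * p**k * (1 - p) ** (distance - k)
        for k in range(first_failing, distance + 1)
    )


if __name__ == "__main__":
    for p in (0.01, 0.6):  # one "good" and one "bad" physical error rate
        for distance in (1, 3, 5):
            rate = logical_error_rate(p, distance)
            print(f"p = {p}, distance = {distance}: logical error = {rate:.6f}")
```

For the small `p`, adding copies lowers the logical error rate. For the large `p`, adding copies makes it worse. The crossover point is called the threshold. In this toy model it sits at `p = 0.5`, whereas for real quantum codes such as the surface code, where the correction circuitry itself is noisy, it is far lower. That is why it was not obvious in advance that a larger logical qubit would help on real hardware.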
