Google Research published an impressive language model that can turn a text description into high-quality music [webpage, paper]. The source code is unfortunately not publicly available.

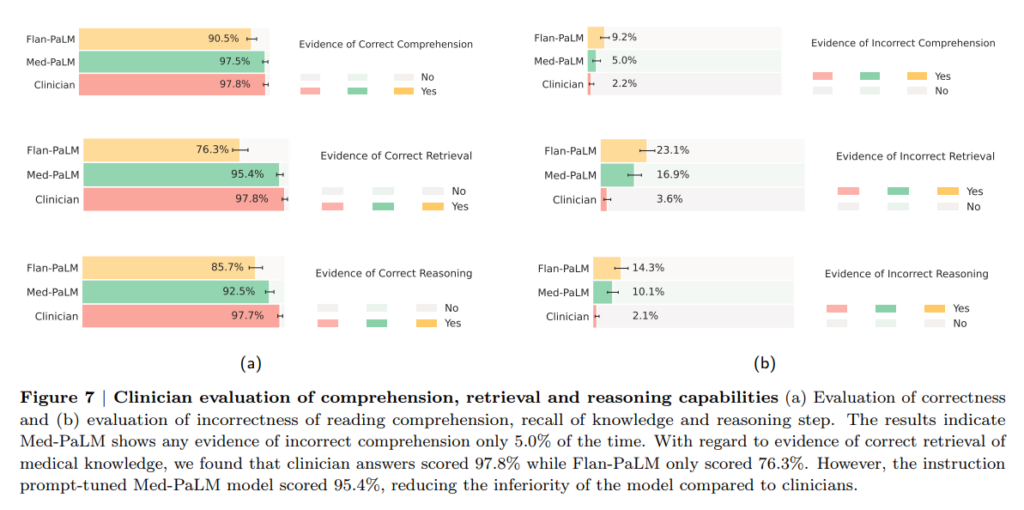

In a paper from Dec 26, 2022, Google demonstrates that its large language model Med-PaLM, a 540-billion-parameter model adapted to the medical domain with instruction prompt tuning, comes close to clinician performance on new medical benchmarks: MultiMedQA (a benchmark combining six existing open question answering datasets spanning professional medical exams, research, and consumer queries) and HealthSearchQA (a new free-response dataset of medical questions searched online). Human experts evaluated the answers for factuality, precision, possible harm, and bias.

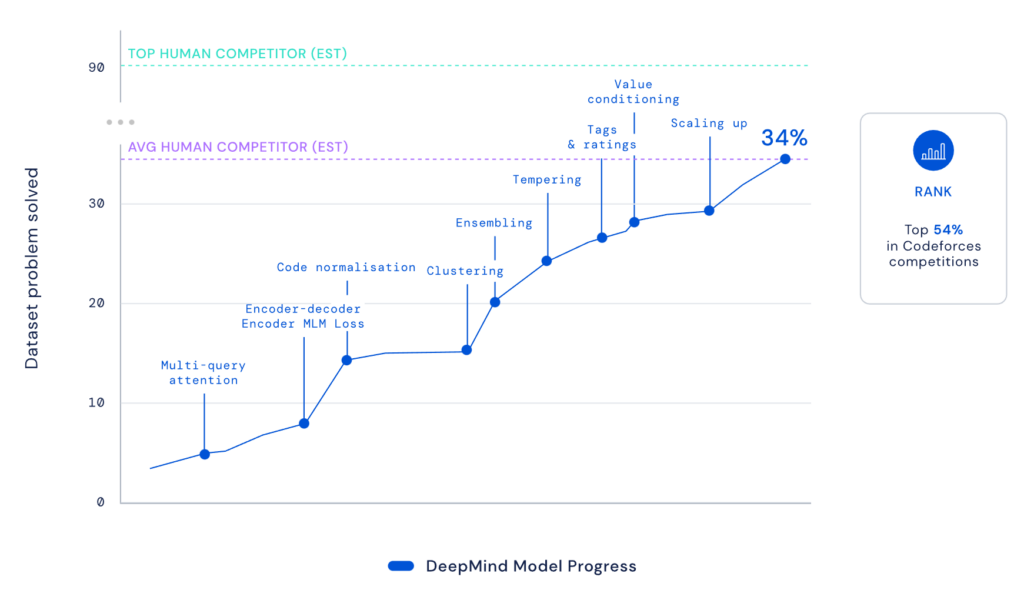

DeepMind reports that AlphaCode reaches the performance of a median human competitor in real-world programming competitions on Codeforces. It does so by scaling up the number of candidate solutions generated per problem to millions, instead of tens as before.

AlphaCode was originally announced on 02 Feb 2022; the work was then published in Science on 08 Dec 2022.
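Generating a very large number of candidates only helps if most of them can be discarded cheaply. The following is a minimal sketch of that sample-and-filter idea, not AlphaCode's actual pipeline: the candidate "programs" are plain Python callables drawn from a tiny hypothetical pool instead of model samples, and the filter simply runs each candidate on the example tests of a toy problem. All function names here are illustrative assumptions.

```python
import random

def sample_candidates(n, seed=0):
    """Stand-in for model sampling: return n candidate 'programs'.

    Assumption: a real system would sample source code from a language
    model; here we draw from a small fixed pool of callables.
    """
    rng = random.Random(seed)
    pool = [
        lambda a, b: a + b,   # the correct solution for the toy problem
        lambda a, b: a - b,
        lambda a, b: a * b,
        lambda a, b: a,
    ]
    return [rng.choice(pool) for _ in range(n)]

def passes(candidate, tests):
    """True if the candidate reproduces every example output without crashing."""
    try:
        return all(candidate(*args) == expected for args, expected in tests)
    except Exception:
        return False

def filter_candidates(candidates, tests):
    """Keep only the candidates that pass all example tests."""
    return [c for c in candidates if passes(c, tests)]

# Toy problem: "add two integers", given two example input/output pairs.
example_tests = [((2, 3), 5), ((0, 7), 7)]
survivors = filter_candidates(sample_candidates(1000), example_tests)
print(f"{len(survivors)} of 1000 candidates pass the example tests")
```

The point of the sketch is the ratio: the more candidates are sampled, the more likely at least one correct program is among the few that survive the filter.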

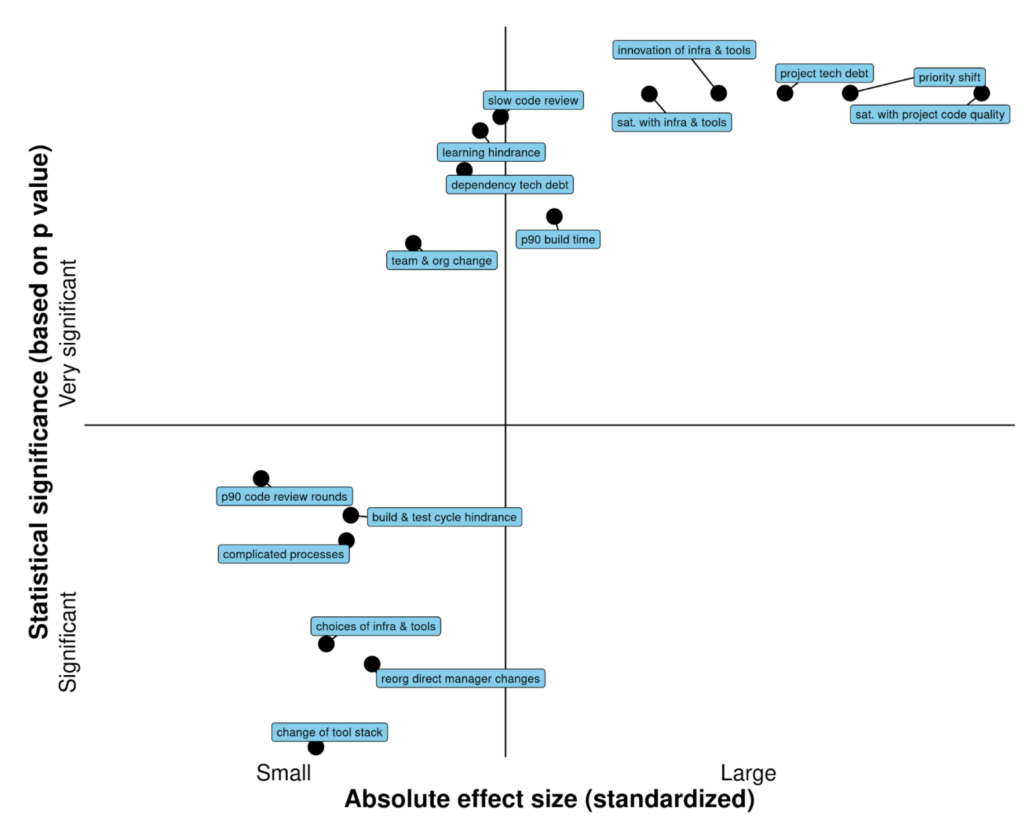

A deep analysis within Google demonstrated that satisfaction with project code quality has the most significant effect on developer productivity.

Asking ChatGPT what code quality means, we get:

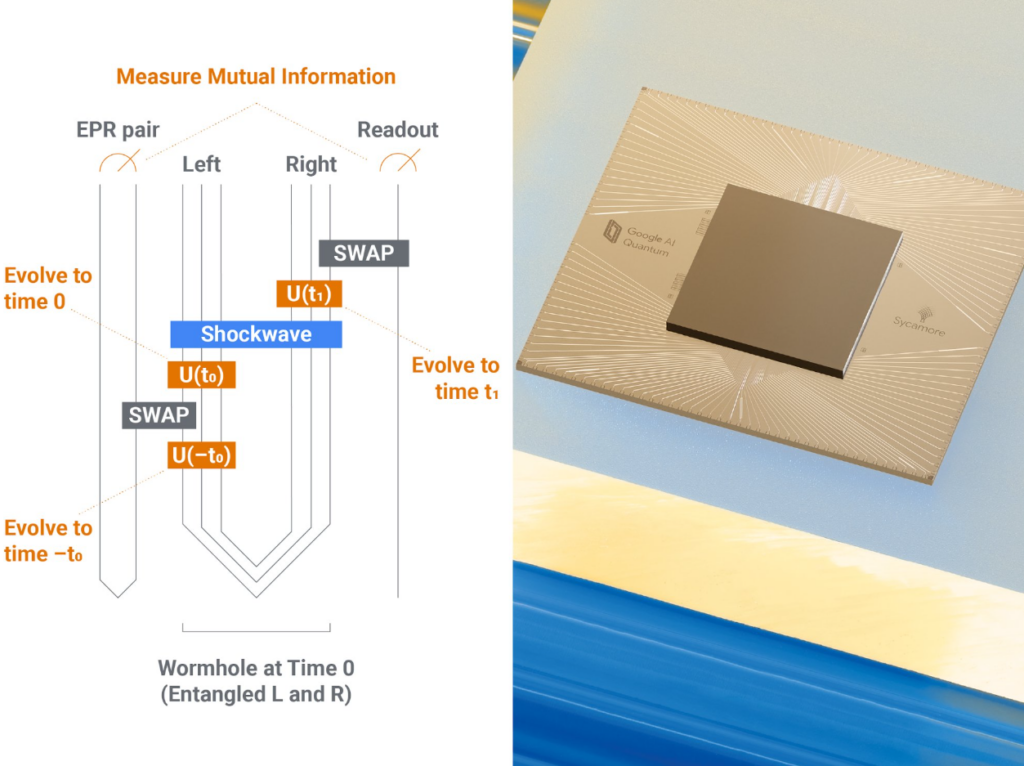

Researchers from Google AI, Caltech, Harvard, MIT, and Fermilab simulated a quantum theory on the Google Sycamore quantum processor to probe the dynamics of a quantum system equivalent to a wormhole in a gravity model.

The quantum experiment is based on the ER=EPR conjecture, which states that wormholes are equivalent to quantum entanglement. ER stands for Einstein and Rosen, who proposed the concept of wormholes in 1935 (the term itself was coined by Wheeler and Misner in a 1957 paper). EPR stands for Einstein, Podolsky, and Rosen, who proposed the concept of entanglement in May 1935, one month before the ER paper (see historical context). The two concepts were considered completely unrelated until Susskind and Maldacena conjectured in 2013 that any pair of entangled quantum systems is connected by an Einstein-Rosen bridge (a non-traversable wormhole). In 2017, Jafferis, Gao, and Wall extended the ER=EPR idea to traversable wormholes and showed that a traversable wormhole is equivalent to quantum teleportation [1][2].

The experiment was published on Nov 30, 2022 in a Nature article. There is also a nice video on YouTube explaining the experiment. In an interesting article, Tim Andersen discusses whether or not a wormhole was actually created in the lab.

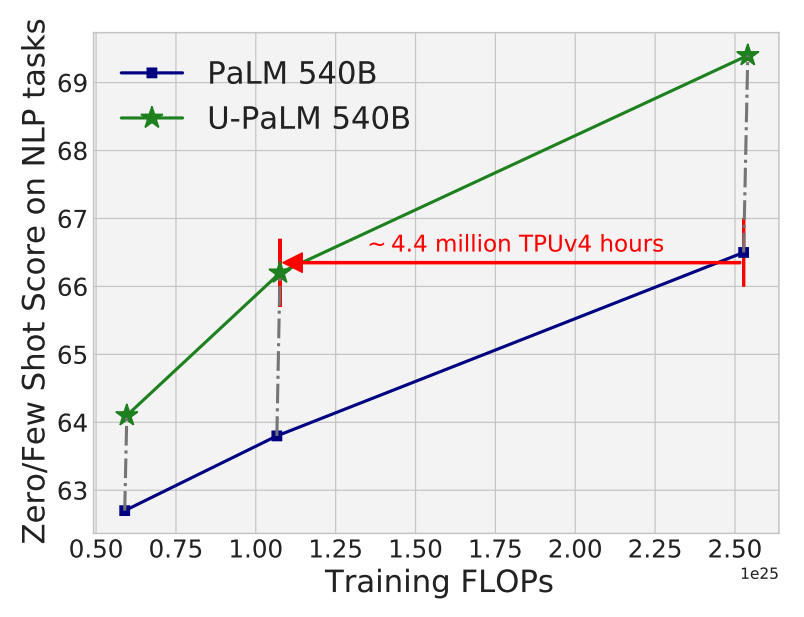

Google presents two complementary techniques to significantly improve language models without massive extra compute:

UL2R (UL2 Repair): an additional stage of continued pre-training with the UL2 (Unified Language Learner) objective (paper), which trains language models on denoising tasks where the model has to recover missing sub-sequences of a given input. Applying it to PaLM results in the new language model U-PaLM.
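To make the denoising idea concrete, here is a minimal sketch of how a single training pair could be built by masking sub-sequences of the input. It is a simplified illustration, not the UL2 implementation: UL2 mixes several denoising configurations, while this shows only one fixed span length, and the `<extra_id_N>` sentinel names are an assumption borrowed from the T5 convention. The helper names are hypothetical.

```python
import random

def corrupt_spans(tokens, span_len=3, n_spans=2, seed=0):
    """Replace n_spans non-overlapping spans with sentinels.

    Returns (inputs, targets): inputs is the corrupted sequence the model
    sees, targets lists each sentinel followed by the tokens it hides.
    """
    rng = random.Random(seed)
    # Pick span starts on a fixed grid so that spans can never overlap.
    slots = list(range(0, len(tokens) - span_len + 1, span_len))
    starts = sorted(rng.sample(slots, n_spans))
    inputs, targets, pos = [], [], 0
    for i, start in enumerate(starts):
        sentinel = f"<extra_id_{i}>"  # assumed T5-style sentinel name
        inputs.extend(tokens[pos:start])
        inputs.append(sentinel)
        targets.append(sentinel)
        targets.extend(tokens[start:start + span_len])
        pos = start + span_len
    inputs.extend(tokens[pos:])
    return inputs, targets

def restore(inputs, targets):
    """Undo corrupt_spans: put each hidden span back in place of its sentinel."""
    spans, current = {}, None
    for tok in targets:
        if tok.startswith("<extra_id_"):
            current = tok
            spans[current] = []
        else:
            spans[current].append(tok)
    restored = []
    for tok in inputs:
        restored.extend(spans[tok] if tok in spans else [tok])
    return restored

tokens = "the quick brown fox jumps over the lazy dog near the river".split()
inputs, targets = corrupt_spans(tokens)
print("input :", " ".join(inputs))
print("target:", " ".join(targets))
```

During training the model receives `inputs` and has to produce `targets`; `restore` is only there to show that the pair loses no information.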

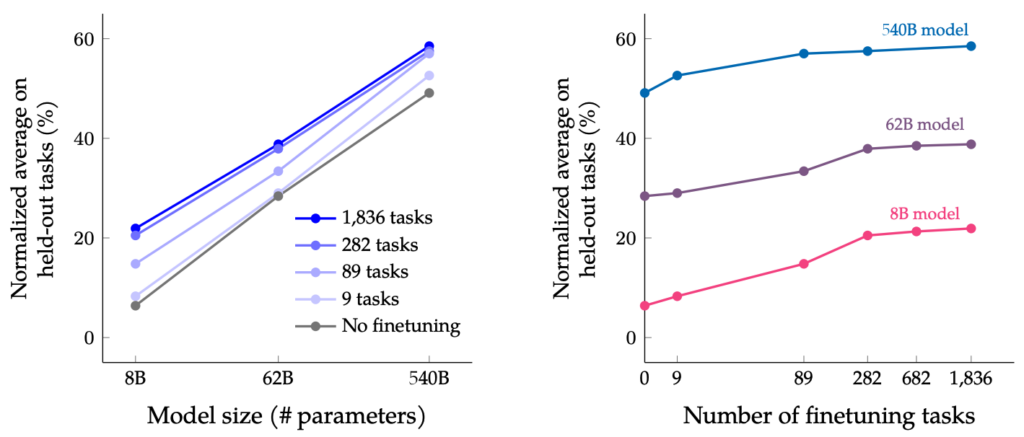

Flan (Fine-tuning language model): instruction fine-tuning on a collection of NLP datasets. Applying it to PaLM results in the language model Flan-PaLM.
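As a rough illustration of what instruction fine-tuning data can look like, the sketch below rewrites one labeled example from a textual entailment dataset into several natural-language prompts with a text target. The templates, the `Example` class, and the function name are hypothetical and chosen for this example only; they are not taken from the Flan collection.

```python
from dataclasses import dataclass

@dataclass
class Example:
    prompt: str   # instruction plus task input, as plain text
    target: str   # expected answer, as plain text

# Hypothetical templates: several phrasings of the same task.
TEMPLATES = [
    "Premise: {premise}\nHypothesis: {hypothesis}\n"
    "Does the premise entail the hypothesis? Answer yes or no.",
    "Read the two sentences and decide whether the second follows "
    "from the first.\n{premise}\n{hypothesis}\nAnswer yes or no.",
]

def to_instruction_examples(premise, hypothesis, entailed):
    """Turn one labeled entailment pair into one Example per template."""
    target = "yes" if entailed else "no"
    return [
        Example(t.format(premise=premise, hypothesis=hypothesis), target)
        for t in TEMPLATES
    ]

for ex in to_instruction_examples(
    "A dog is running through the park.", "An animal is outside.", True
):
    print(ex.prompt, "->", ex.target)
```

Phrasing the same task in several ways is meant to teach the model to follow the instruction itself instead of memorizing one fixed input format.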

Combining the two approaches and applying them to PaLM results in Flan-U-PaLM.

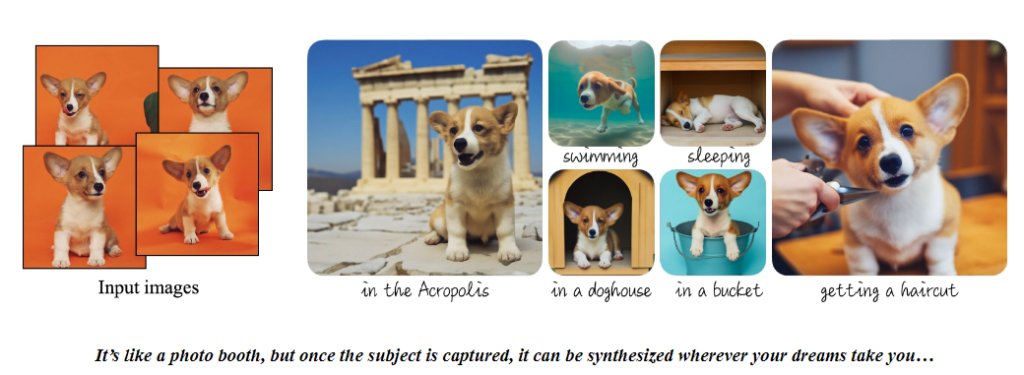

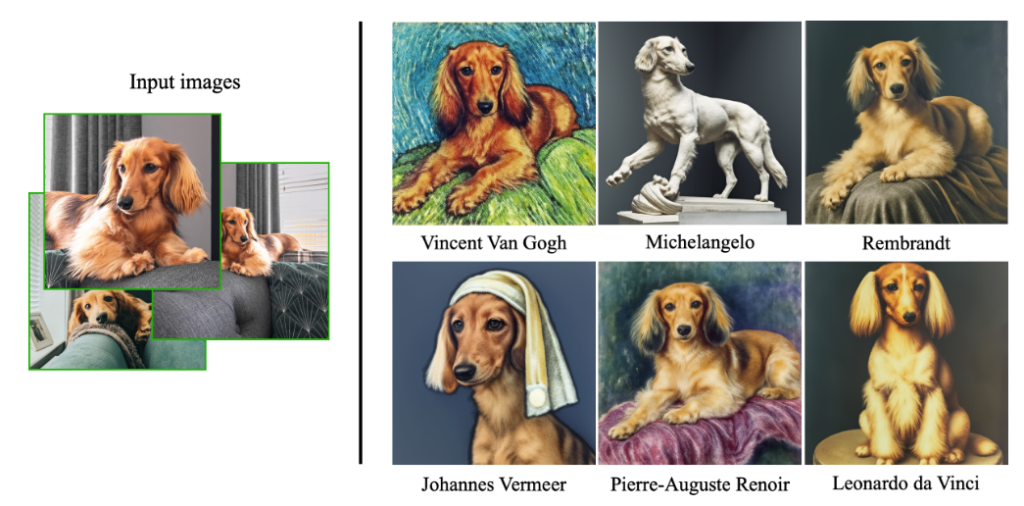

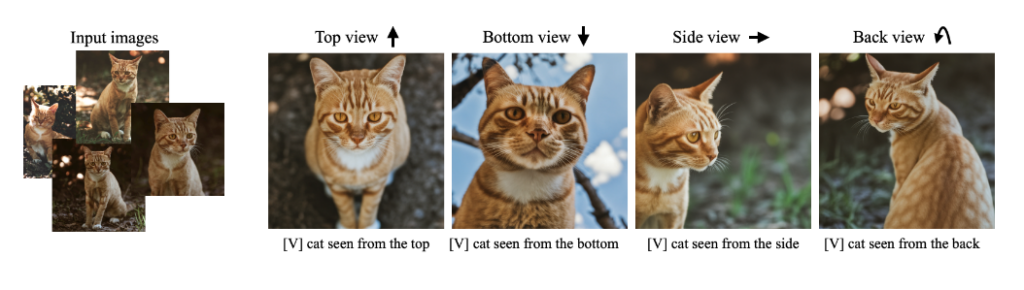

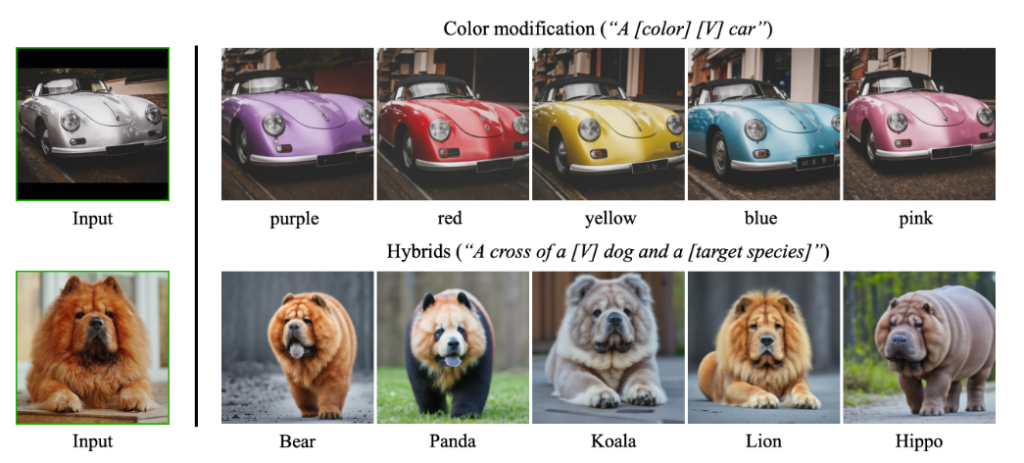

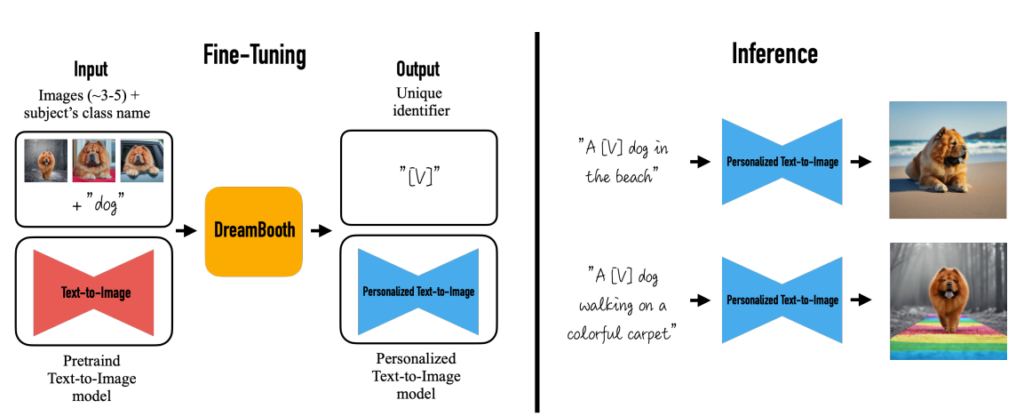

Google presents DreamBooth, a technique to synthesize a subject (defined by 3-5 images) in new contexts defined by text input.

The method is based on Google’s pre-trained text-to-image model Imagen, which is not publicly available. However, source code based on Stable Diffusion already exists on GitHub.

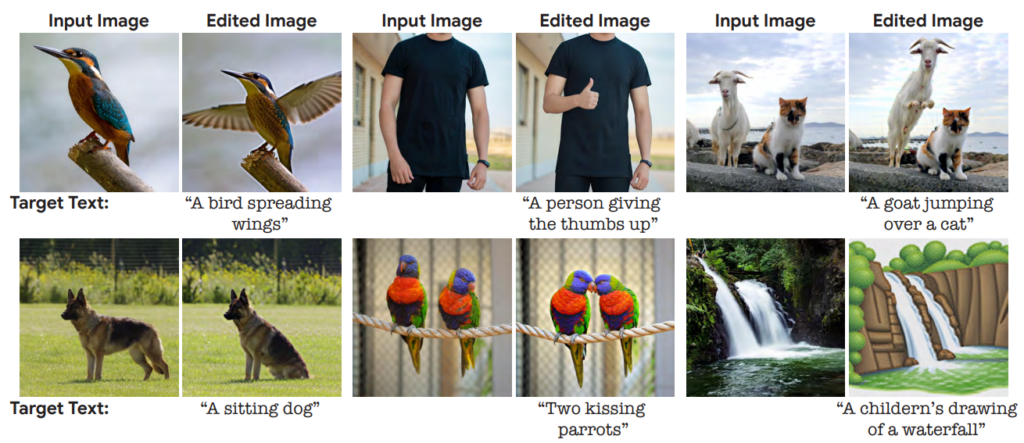

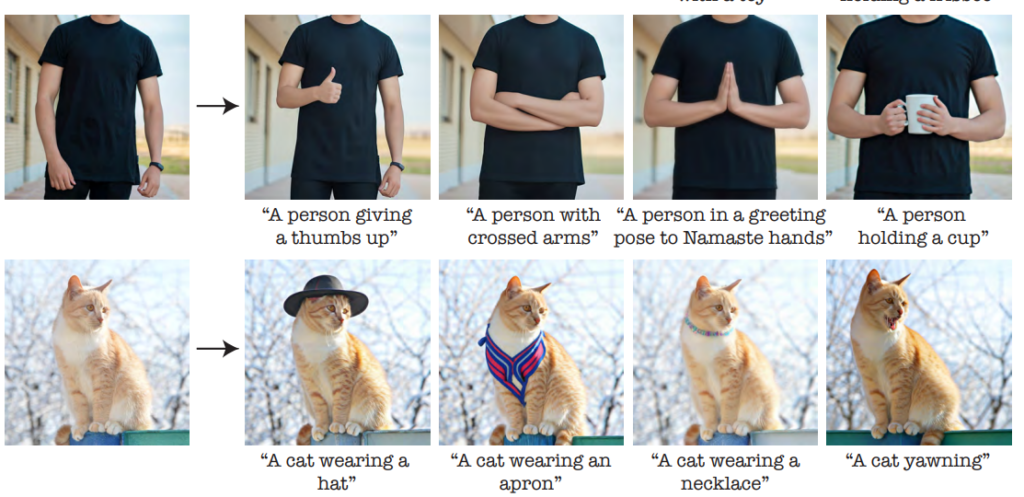

Google researchers present Imagic, a method to edit images by text input.

The method is based on Google’s pre-trained image generator Imagen, which is not publicly available. However, source code based on Stable Diffusion already exists on GitHub.

A recent publication from the University of California, Berkeley [InstructPix2Pix] goes in a similar direction and shows even more impressive results.

DreamFusion: Text-to-3D model using 2D diffusion via Google’s Imagen model.

Since Imagen is not publicly available, Stable Diffusion can be used instead to generate your own 3D models with Stable-Dreamfusion as described here.

Meanwhile, NVIDIA has presented Magic3D, a high-resolution text-to-3D content generation model with much higher quality. The paper was released on arXiv on Nov 18, 2022.

Google’s Text-To-Video generation tool Imagen-Video looks even more impressive than Meta’s Make-A-Video.

© 2025 Stephan Seeger
