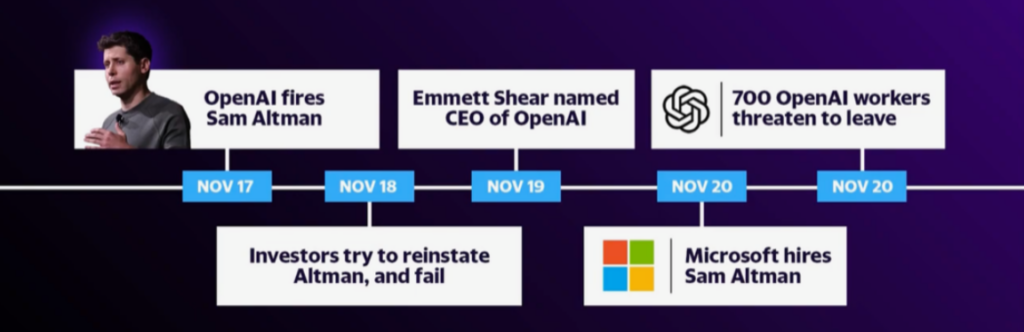

Nov 17, 2023:

Sam Altman was fired from OpenAI. Greg Brockman was first removed as chairman of the board and later announced that he was quitting. Chief technology officer Mira Murati was appointed interim CEO.

From OpenAI’s announcement: “Mr. Altman’s departure follows a deliberative review process by the board, which concluded that he was not consistently candid in his communications with the board, hindering its ability to exercise its responsibilities. The board no longer has confidence in his ability to continue leading OpenAI.”

Some early discussions on YouTube: SVIC Podcast, Matt Wolfe.

Early details of what happened: Wes Roth.

No details are known as to why Sam Altman was fired, only speculation.

Nov 18, 2023:

More senior departures from OpenAI.

Some summaries of what is known a day later: [Matt Wolfe][AI Explained][MattVidPro AI][Yannic Kilcher].

Reports that the OpenAI board is in discussions with Sam Altman to return as CEO.

Still, no details are known as to why Sam Altman was fired, just more speculation.

Nov 19, 2023:

Emmett Shear (Twitch co-founder and former Twitch CEO) becomes OpenAI’s interim CEO (his tweet).

Still, no details are known as to why Sam Altman was fired, just more speculation.

Nov 20, 2023:

Announcement that Sam Altman and Greg Brockman will join Microsoft to lead a new Microsoft subsidiary.

Ilya Sutskever expressed regret for his participation in the board’s actions.

An employee letter to OpenAI’s board, signed by more than 700 of the 770 employees (including Ilya Sutskever and one-day CEO Mira Murati), requests the resignation of the whole board and the reappointment of Sam Altman as CEO. Otherwise, the signatories say they will resign and join the newly announced Microsoft subsidiary.

OpenAI’s board approached Anthropic about a merger [1].

Summaries of the latest news: [Matt Wolfe][AI Explained][Bloomberg].

Still, no details are known as to why Sam Altman was fired, just more speculation.

Nov 21, 2023:

Summary of the latest status: [Wes Roth].

Instead of taking OpenAI’s merger offer, Anthropic announced a massive update with Claude 2.1 and a 200K context window.

Nov 22, 2023:

Sam Altman is back at OpenAI.

Summaries of the whole soap opera: [Matt Wolfe][AI Explained].

Still, no details are known as to why Sam Altman was fired, just more speculation.

Nov 23, 2023:

New rumors about Sam Altman’s ouster: Mira Murati told employees on Wednesday that a letter about an AI breakthrough called Q* precipitated the board’s actions.

Background of why Sam Altman may have been fired:

There is much speculation that safety-minded people (like Ilya Sutskever) acted against people trying to accelerate AI commercialization (Sam Altman, Greg Brockman). As more and more money is poured in, there may be a concern about losing control over OpenAI’s mission to achieve AGI for the benefit of all of humanity. Interesting in this context is a statement [1][2] by Sam Altman at the APEC summit in San Francisco on November 16, 2023 (where US President Biden met Chinese President Xi Jinping) that OpenAI recently made a major breakthrough. In addition, he made a statement [1][2] about the model’s capability within the next year. Does this mean that AGI was achieved within OpenAI? This matters in the context of OpenAI’s structure as a partnership between the original nonprofit and a capped-profit arm. The important parts of the document describing the structure are:

- First, the for-profit subsidiary is fully controlled by the OpenAI Nonprofit…

- Second, … The Nonprofit’s principal beneficiary is humanity, not OpenAI investors.

- Fourth, profit allocated to investors and employees, including Microsoft, is capped. All residual value created above and beyond the cap will be returned to the Nonprofit for the benefit of humanity.

- Fifth, the board determines when we’ve attained AGI. Again, by AGI we mean a highly autonomous system that outperforms humans at most economically valuable work. Such a system is excluded from IP licenses and other commercial terms with Microsoft, which only apply to pre-AGI technology.

This means that once AGI is achieved (and the board decides when this is the case), investors can no longer benefit from further advancements. Their investment is basically lost.

Another speculation is that OpenAI board member Adam D’Angelo is behind Sam Altman’s ouster. Adam D’Angelo is co-founder and CEO of Quora, which built the chat platform Poe. Poe’s assistant feature, introduced on October 25, is in direct competition with OpenAI’s custom GPTs, which were made public at OpenAI’s DevDay on November 6, 2023. However, this explanation becomes less likely given that Adam is also part of the new board formed after Sam was rehired.