The field of artificial intelligence raises numerous questions, frequently discussed but often left without a clear consensus. We’ve chosen to contribute our unique insights on some of these recurring topics, aiming to shed light on them from our perspective. In formulating this text, we’ve utilized GPT-4 to assist with language generation, but the insights and conclusions drawn are entirely our own.

The questions we address are:

- Can Machines Develop Consciousness?

- Should Humans Verify Statements from Large Language Models (LLMs)?

- Can Large Language Models (LLMs) Generate New Knowledge?

From the philosophical implications to practical applications, these topics encompass the broad scope of AI’s capabilities and potential.

Can Machines Develop Consciousness? A Subjective Approach

The question of whether machines can develop consciousness has sparked much debate and speculation. A fruitful approach might be to focus not solely on the machines themselves, but also on our subjective interpretations and their influence on our understanding of consciousness.

Consciousness might not be directly definable, but its implications could be essential for our predictive abilities. When we assign consciousness to an entity – including ourselves – we could potentially enhance our ability to anticipate and understand its behavior.

Attributes often associated with consciousness include self-reflection, self-perception, emotional experience, and notably, the capacity to experience pain, as highlighted by historian Yuval Noah Harari. However, recognizing these attributes in an object is a subjective process. They are not inherently possessed by the object but are projected onto it by us, the observers, based on our interpretation of the object’s behaviors and characteristics.

This suggests that a machine could be considered “conscious” if assigning such traits improves our understanding and prediction of its behavior. Interestingly, this notion of consciousness assignment aligns with a utilitarian perspective, prioritizing practicality and usefulness over abstract definitions.

Reflecting on consciousness might not always be a conscious and rationalized process. Often, our feelings and intuition guide us in understanding and interpreting the behaviors of others, including machines. Therefore, our subconscious might play a crucial role in determining whether we assign consciousness to machines. In this light, it might make sense to take a democratic approach in which individuals report their feelings or intuitions about a machine, collectively contributing to the decision of whether to assign it consciousness.

Furthermore, reflexivity, commonly associated with consciousness, could potentially be replicated in machines through a form of “metacognitive” program. This program would analyze and interpret the output of a machine learning model, mirroring aspects of self-reflection (as in SelFee). Yet, whether we choose to perceive this program as part of the same entity as the model or as a separate entity may again depend on our subjective judgment.

In conclusion, the concept of consciousness emerges more from our personal perspectives and interpretations than from any inherent qualities of the machines themselves. Therefore, whether a machine is ‘conscious’ or not may be best decided by the proposed democratic process. The crucial consideration, which underscores the utilitarian nature of this discussion, is that attributing consciousness to machines could increase our own predictive abilities, making our interactions with them more intuitive and efficient. Thus, the original question ‘Can machines develop consciousness?’ could be more usefully reframed as ‘Does it improve our ability to predict their behavior, or feel intuitively right, to assign consciousness to machines?’ This shift in questioning underscores the fundamentally subjective and pragmatic nature of this discussion, engaging both our cognitive processes and emotional intuition.

Should Humans Verify Statements from Large Language Models (LLMs)? A Case for Autonomous Verification

In the realm of artificial intelligence (AI), there is ongoing discourse regarding the necessity for human verification of outputs generated by large language models (LLMs) due to their occasional “hallucinations”, or generation of factually incorrect statements. However, this conversation may need to pivot, taking into account advanced and automated verification mechanisms.

LLMs operate by predicting the most probable text completion based on the provided context. While they’re often accurate, there are specific circumstances in which they generate “hallucinations”. This typically occurs when the LLM is dealing with a context where learned facts are absent or irrelevant, leaving the model to generate a text completion that appears factually correct (given its formal structure) but is in fact fabricated. This divergence from factuality suggests a need for verification, but it doesn’t inherently demand human intervention.

Rather than leaning on human resources to verify LLM-generated statements, a separate verification program could be employed for this task. This program could cross-check the statements against a repository of factual information—akin to a human performing a Google search—and flag or correct inaccuracies.
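The idea of a separate verification program can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the tiny in-memory `FACTS` dictionary stands in for a real repository or search backend, and exact matching on a normalized string stands in for real claim matching, which would be far harder.

```python
from typing import Dict, List, Tuple

# Hypothetical fact repository: normalized claim -> whether it is true.
FACTS: Dict[str, bool] = {
    "paris is the capital of france": True,
    "the moon is made of cheese": False,
}


def normalize(statement: str) -> str:
    """Lowercase, drop surrounding punctuation, and collapse whitespace."""
    return " ".join(statement.lower().strip(" .!?").split())


def verify(statements: List[str], facts: Dict[str, bool]) -> List[Tuple[str, str]]:
    """Label each statement 'supported', 'contradicted', or 'unverified'."""
    results = []
    for statement in statements:
        key = normalize(statement)
        if key not in facts:
            label = "unverified"  # nothing in the repository to check against
        elif facts[key]:
            label = "supported"
        else:
            label = "contradicted"
        results.append((statement, label))
    return results


if __name__ == "__main__":
    llm_output = [
        "Paris is the capital of France.",
        "The Moon is made of cheese!",
        "GPT-4 has a favourite colour.",
    ]
    for statement, label in verify(llm_output, FACTS):
        print(f"{label:12} {statement}")
```

Note the third label: a statement the repository knows nothing about is flagged as unverified rather than silently accepted, which is the case most relevant to hallucinations.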

This brings us to the conception of the LLM and the verification program as a single entity: a composite AI system. This approach could help create a more reliable AI system, one that is capable of autonomously verifying its own statements (as in Self-Consistency; see also Exploring MIT Mathematics, where GPT-4 is reported to reach 100% performance with special prompting, although this claim has also been criticized).
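One simple way to picture self-verification in the spirit of Self-Consistency is to sample several answers to the same question and keep the one that occurs most often. The sketch below shows only this majority-vote step. The `sample` callable is a hypothetical stand-in for a call to an LLM with a non-zero temperature, and `make_stub` merely replays canned answers so the example runs without any model.

```python
from collections import Counter
from typing import Callable, Iterable, Tuple


def self_consistent_answer(
    sample: Callable[[str], str], question: str, n: int = 5
) -> Tuple[str, float]:
    """Sample n answers and return the most frequent one with its vote share."""
    answers = [sample(question) for _ in range(n)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / n


def make_stub(canned: Iterable[str]) -> Callable[[str], str]:
    """Hypothetical sampler that replays canned answers instead of calling an LLM."""
    it = iter(canned)
    return lambda question: next(it)


if __name__ == "__main__":
    sampler = make_stub(["42", "41", "42", "42", "40"])
    answer, share = self_consistent_answer(sampler, "What is 6 * 7?", n=5)
    print(answer, share)
```

A low vote share could serve as a signal that the answer deserves further checking, for example by the verification program described above.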

It is vital to recognize that the lack of such a verification feature in current versions of LLMs, such as GPT-3 or GPT-4, does not imply that it is infeasible in future iterations or supplementary AI systems. Advances in AI research and development might well bring such enhancements.

In essence, discussions about present limitations shouldn’t eclipse potential future advancements. The question should transition from “Do humans need to verify LLM statements?” to “How can AI systems be refined to effectively shoulder the responsibility of verifying their own outputs?”

Can Large Language Models (LLMs) Generate New Knowledge?

There’s a frequent argument that large language models (LLMs) merely repackage existing knowledge and are incapable of generating anything new. However, this perspective may betray a misunderstanding of both the operation of LLMs and the process of human knowledge generation.

LLMs excel at completing arbitrary context. Almost invariably, this context is novel, especially when provided by a human interlocutor. Hence, the generated completion is also novel. Within a conversation, this capacity can incidentally set up a context that, with a high probability, generates output that we might label as a brilliant, entirely new idea. It’s this exploration of the vast space of potential word combinations that allows for the random emergence of novel ideas, much like how human creativity works. The likelihood of generating a groundbreaking new idea increases if the context window already contains intriguing information, for instance when a scientist is contemplating an interesting problem.

It’s important to note that such a dialogue doesn’t necessarily need to involve a human. One instance of the LLM can “converse” with another. If we interpret these two instances as parts of a whole, the resulting AI can systematically trawl through the space of word combinations, potentially generating new, interesting ideas. Parallelizing this process millions of times over should increase the probability of discovering an exciting idea.

But how do we determine whether a word completion contains a new idea? This assessment could be assigned to yet another instance of the LLM. More effective, perhaps, would be to have the word completion evaluated not by one, but by thousands of LLM instances, in a sort of AI-based peer review process.
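The aggregation step of such an AI-based peer review could look roughly like the sketch below. Each reviewer is modeled as a hypothetical callable that returns a novelty score between 0 and 1; in practice this would be an LLM instance prompted to assess the completion. The averaging rule and the `threshold` parameter are assumptions made for illustration, not a proposal for how scores should actually be combined.

```python
from statistics import mean
from typing import Callable, Dict, List

# A reviewer maps a candidate idea to a novelty score in [0, 1].
Reviewer = Callable[[str], float]


def peer_review(idea: str, reviewers: List[Reviewer], threshold: float = 0.5) -> Dict:
    """Collect one score per reviewer and accept the idea if the mean is high enough."""
    scores = [reviewer(idea) for reviewer in reviewers]
    average = mean(scores)
    return {"scores": scores, "mean": average, "accepted": average >= threshold}


if __name__ == "__main__":
    # Stub reviewers standing in for differently prompted LLM instances.
    reviewers: List[Reviewer] = [
        lambda idea: 1.0,  # always enthusiastic
        lambda idea: 0.0,  # always sceptical
        lambda idea: 1.0 if "prime" in idea else 0.2,  # cares about the topic
    ]
    print(peer_review("A new pattern in prime gaps", reviewers))
```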

Let’s clarify what we mean by different instances of an LLM. In the simplest case, different instances are just different roles in the conversation, e.g. bot1 and bot2. A single call to the LLM can then simply continue the conversation, switching between bot1 and bot2 as appropriate, until the token limit is reached. The next call to the LLM is then triggered with a summary of the previous conversation, so that there is again room in the limited context window for further discussion between the bots.

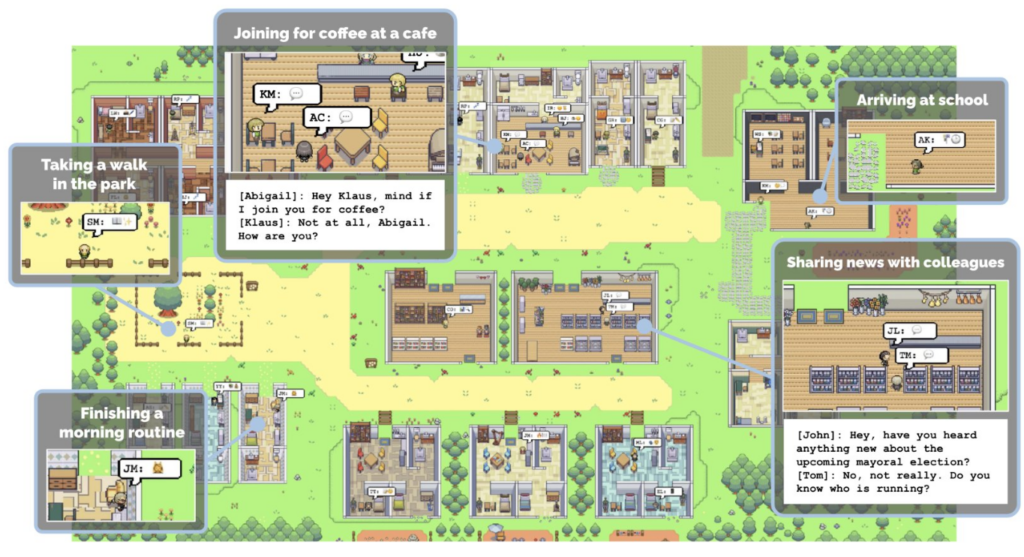
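The role-switching loop with summarization can be sketched as follows. This is a simplified illustration under several assumptions: `complete` and `summarize` are hypothetical stand-ins for LLM calls, tokens are approximated by whitespace-separated words, and the sketch makes one call per turn rather than letting a single call continue the whole exchange.

```python
from typing import Callable, List, Tuple


def count_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def run_dialogue(
    complete: Callable[[str, str], str],
    summarize: Callable[[str], str],
    opening: str,
    turns: int,
    token_limit: int,
) -> Tuple[List[str], str]:
    """Alternate bot1/bot2; replace the context by a summary once it grows too long."""
    context = opening
    transcript: List[str] = []
    speakers = ("bot1", "bot2")
    for i in range(turns):
        speaker = speakers[i % 2]
        if count_tokens(context) >= token_limit:
            # Free up room in the window by compressing everything said so far.
            context = "Summary: " + summarize(context)
        line = f"{speaker}: {complete(context, speaker)}"
        transcript.append(line)
        context += "\n" + line
    return transcript, context


# Stubs so the sketch runs without a model (purely illustrative).
def stub_complete(context: str, speaker: str) -> str:
    return f"reply after {count_tokens(context)} tokens"


def stub_summarize(text: str) -> str:
    return "short recap"


if __name__ == "__main__":
    transcript, context = run_dialogue(
        stub_complete, stub_summarize, "Topic: prime numbers", turns=6, token_limit=12
    )
    print("\n".join(transcript))
```

The full transcript is kept separately from the context, so nothing is lost for later inspection even though the model only ever sees the compressed version.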

To better simulate a discussion between two humans, or between a human and a bot, two instances of the LLM could also mean two simulated agents, each with its own memory. This memory must always be pasted into the context window together with the ongoing conversation, in such a way that the limited context window still leaves room for the LLM’s text completion. Each agent generates its own summary of the previous conversation, based on its own memory and the recent conversation, and this summary is then added to its memory. In this way, each reviewer LLM instance mentioned above also has in its context window a unique memory, the last part of the conversation, and the task of assessing the last output in the discussion. The unique memory gives each agent a unique perspective on the conversation.
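A minimal sketch of such an agent, under stated assumptions, might look like this. The `Agent` class, its `summarize` callable (standing in for an LLM summarization call), and the word-based `budget` and `reserve` parameters are all hypothetical names introduced for illustration. The sketch also lets memory grow without bound, which a real system would have to handle.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Agent:
    name: str
    summarize: Callable[[str], str]  # stand-in for an LLM summarization call
    memory: List[str] = field(default_factory=list)

    def build_context(self, recent: List[str], budget: int, reserve: int) -> str:
        """Memory plus the latest turns that fit, leaving `reserve` words for the completion."""
        available = budget - reserve
        used = sum(len(entry.split()) for entry in self.memory)
        kept: List[str] = []
        for turn in reversed(recent):  # prefer the most recent turns
            cost = len(turn.split())
            if used + cost > available:
                break
            kept.append(turn)
            used += cost
        return "\n".join(self.memory + kept[::-1])

    def remember(self, recent: List[str]) -> None:
        """Summarize own memory plus the recent conversation and store the result."""
        self.memory.append(self.summarize("\n".join(self.memory + recent)))


if __name__ == "__main__":
    recent = ["bot1: hello there", "bot2: hi"]
    alice = Agent("alice", summarize=lambda t: f"alice saw {len(t.split())} words")
    bob = Agent("bob", summarize=lambda t: "bob recap")
    for agent in (alice, bob):
        agent.remember(recent)
    print(alice.build_context(recent, budget=10, reserve=3))
    print(bob.build_context(recent, budget=10, reserve=3))
```

Because each agent summarizes with its own memory as input, two agents that saw the same conversation can still end up with different contexts.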

This, in effect, reveals a potential new avenue for idea generation, knowledge expansion, and innovation, one that leverages the predictive capabilities of AI.