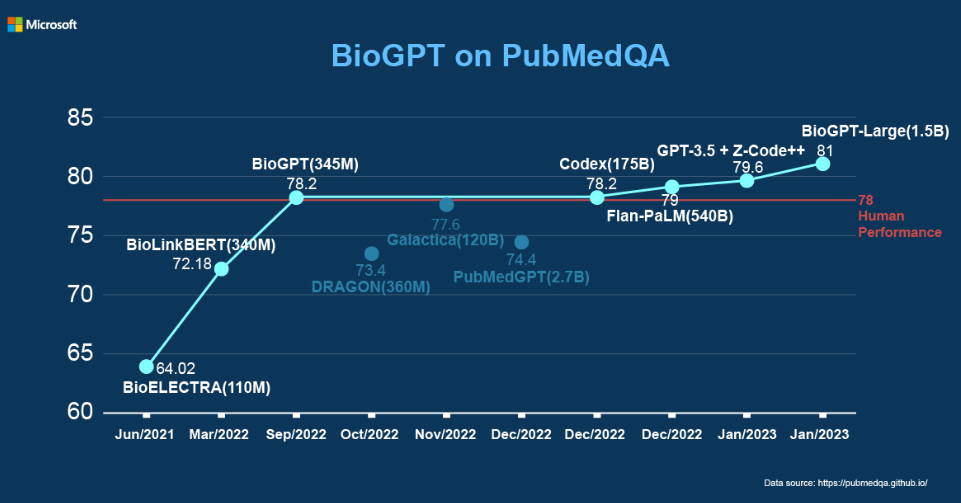

Microsoft Research presented BioGPT, a domain-specific generative model that was trained on biomedical literature and achieved best-in-class performance, above the human level [paper, article].


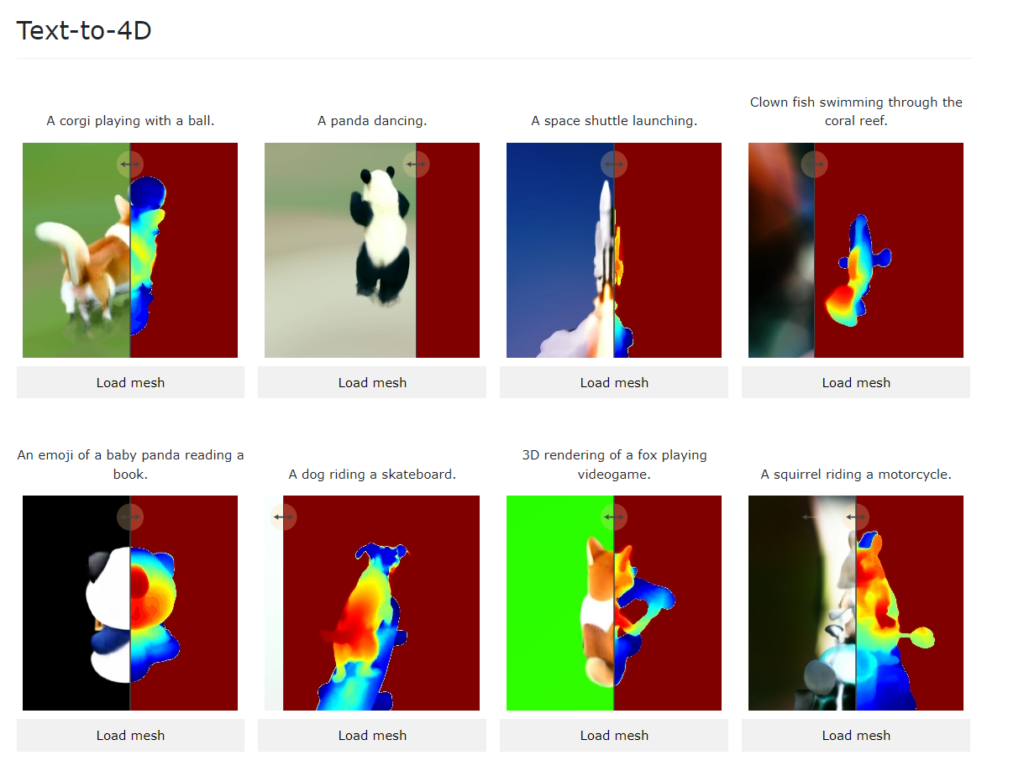

Meta presented MAV3D (Make-A-Video-3D), a method for generating 4D content, i.e. a 3D video, from a text description by using a 4D dynamic Neural Radiance Field (NeRF) [project page, paper]. Unfortunately, the source code has not been released.

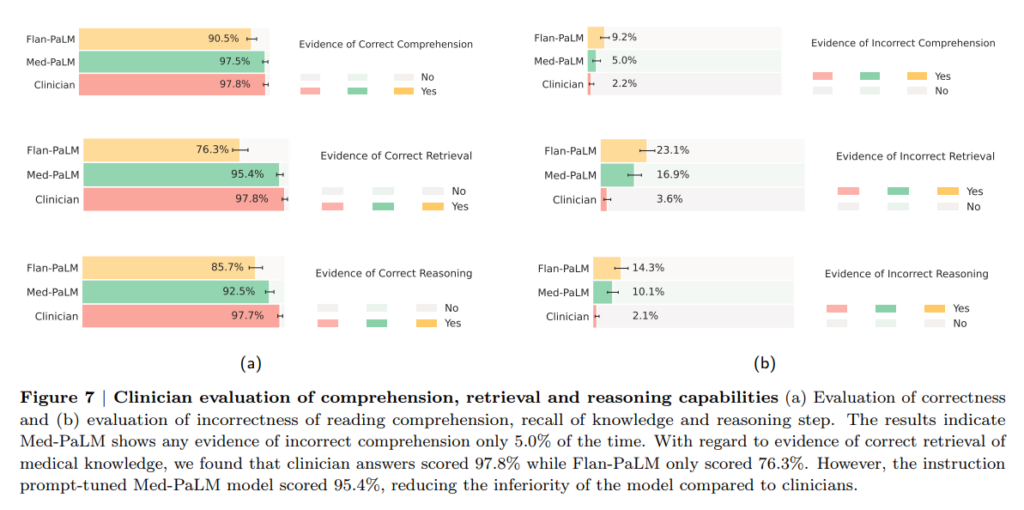

In a paper from Dec 26, 2022, Google demonstrates that its large language model Med-PaLM, which has 540 billion parameters and uses a special instruction prompt tuning for the medical domain, comes close to clinician performance on the new medical benchmarks MultiMedQA (a benchmark combining six existing open question-answering datasets spanning professional medical exams, research, and consumer queries) and HealthSearchQA (a new free-response dataset of medical questions searched online). Human experts evaluated the answers with respect to factuality, precision, possible harm, and bias.

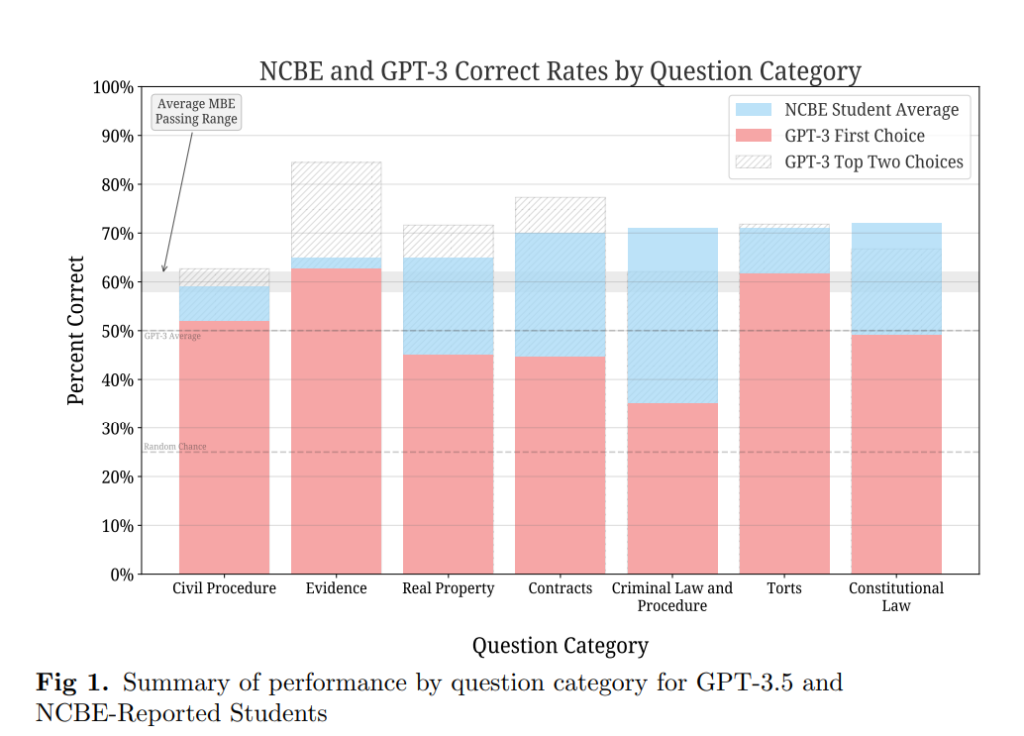

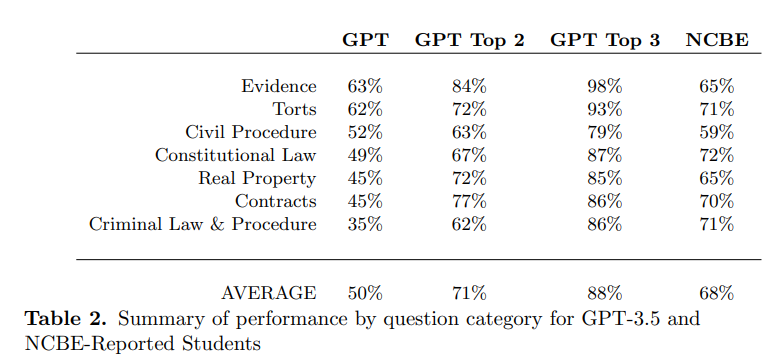

In the United States, most jurisdictions require applicants to pass the Bar Exam in order to practice law. This exam typically requires several years of education and preparation (seven years of post-secondary education, including three years at an accredited law school).

In a publication from Dec 29, 2022, the authors evaluated the performance of GPT-3.5 on the multiple-choice part of the exam. Although GPT-3.5 does not yet pass that part of the exam, it significantly exceeds the random-chance baseline of 25% and reaches the average human passing rate in the categories Evidence and Torts.

On average, GPT-3.5 performs about 17% worse than human test-takers across all categories.

A similar result is the report that ChatGPT was able to pass a Wharton Master of Business Administration (MBA) exam.

On March 15, 2023, a paper reported that GPT-4 significantly outperforms both human test-takers and prior models, with a 26% increase over GPT-3.5, and beats humans in five of seven subject areas.

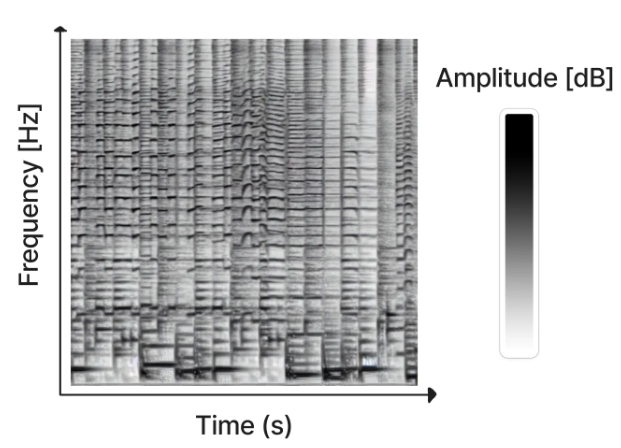

The software RIFFUSION (RIFF + diffusion) uses the Stable Diffusion v1.5 model without any architectural modifications, just fine-tuned on images of spectrograms paired with text, and generates incredibly interesting music from text input. By interpolating in latent space, it is possible to transition smoothly from one text prompt to the next. You can try out the model here.
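To make the idea of interpolating in latent space more concrete, here is a minimal sketch of spherical linear interpolation (slerp) between two latent vectors. Slerp is a common choice for diffusion latents, but whether RIFFUSION uses exactly this scheme is an assumption here; the function names `slerp` and `interpolate_path` and the toy two-dimensional vectors are purely illustrative.

```python
import math


def slerp(v0, v1, t):
    """Spherical linear interpolation between two vectors of equal length.

    t = 0 returns v0, t = 1 returns v1, values in between move along the arc.
    """
    dot = sum(a * b for a, b in zip(v0, v1))
    norm0 = math.sqrt(sum(a * a for a in v0))
    norm1 = math.sqrt(sum(b * b for b in v1))
    # Clamp to guard against rounding errors before calling acos.
    cos_theta = max(-1.0, min(1.0, dot / (norm0 * norm1)))
    theta = math.acos(cos_theta)
    sin_theta = math.sin(theta)
    if abs(sin_theta) < 1e-6:
        # Vectors are (anti-)parallel: fall back to plain linear interpolation.
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    s0 = math.sin((1 - t) * theta) / sin_theta
    s1 = math.sin(t * theta) / sin_theta
    return [s0 * a + s1 * b for a, b in zip(v0, v1)]


def interpolate_path(latent_a, latent_b, steps):
    """Return `steps` latents (steps >= 2) moving from latent_a to latent_b."""
    return [slerp(latent_a, latent_b, i / (steps - 1)) for i in range(steps)]


if __name__ == "__main__":
    # Toy latents standing in for the latents of two text prompts.
    for latent in interpolate_path([1.0, 0.0], [0.0, 1.0], steps=5):
        print([round(x, 3) for x in latent])
```

In a real pipeline each intermediate latent would be decoded into a spectrogram and then converted to audio; the sketch only covers the interpolation step.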

The authors provide source code on GitHub for an interactive web app and an inference server. A model checkpoint is available on Hugging Face.

There is a nice video about RIFFUSION by Alan Thompson on YouTube.

Even more shocking than using diffusion on spectrograms and getting great results may be a paper by Google Research published on Dec 15, 2022. They render text as an image and train their model with a contrastive loss alone, hence the name CLIP-Pixels Only (CLIPPO). It is a joint model that processes images and text with a single ViT (Vision Transformer) and achieves astonishing performance.
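For readers unfamiliar with contrastive training, the following is a minimal sketch of a symmetric, CLIP-style contrastive loss on a batch of paired embeddings. It is not the CLIPPO implementation: the function names, the temperature value of 0.1, and the toy embeddings are assumptions for illustration, and the encoder that would produce the embeddings (in CLIPPO, one shared ViT for images and rendered text) is left out.

```python
import math


def normalize(v):
    """Scale a vector to unit length."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def log_softmax(row):
    """Numerically stable log-softmax of a list of logits."""
    m = max(row)
    lse = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - lse for x in row]


def contrastive_loss(image_emb, text_emb, temperature=0.1):
    """Symmetric contrastive loss; row k of each input is a matching pair."""
    imgs = [normalize(v) for v in image_emb]
    txts = [normalize(v) for v in text_emb]
    n = len(imgs)
    # Cosine similarity of every image with every text, scaled by temperature.
    logits = [[sum(a * b for a, b in zip(i, t)) / temperature for t in txts]
              for i in imgs]
    # Image-to-text direction: the correct text for image k is text k.
    loss_i2t = -sum(log_softmax(logits[k])[k] for k in range(n)) / n
    # Text-to-image direction: same logits, read column-wise.
    columns = [[logits[r][c] for r in range(n)] for c in range(n)]
    loss_t2i = -sum(log_softmax(columns[k])[k] for k in range(n)) / n
    return 0.5 * (loss_i2t + loss_t2i)


if __name__ == "__main__":
    images = [[1.0, 0.0], [0.0, 1.0]]
    matching_texts = [[1.0, 0.0], [0.0, 1.0]]
    swapped_texts = [[0.0, 1.0], [1.0, 0.0]]
    print("aligned pairs:", contrastive_loss(images, matching_texts))
    print("swapped pairs:", contrastive_loss(images, swapped_texts))
```

The loss is small when each image embedding is closest to its own text embedding and large when the pairs are mixed up, which is the only training signal such a model needs.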

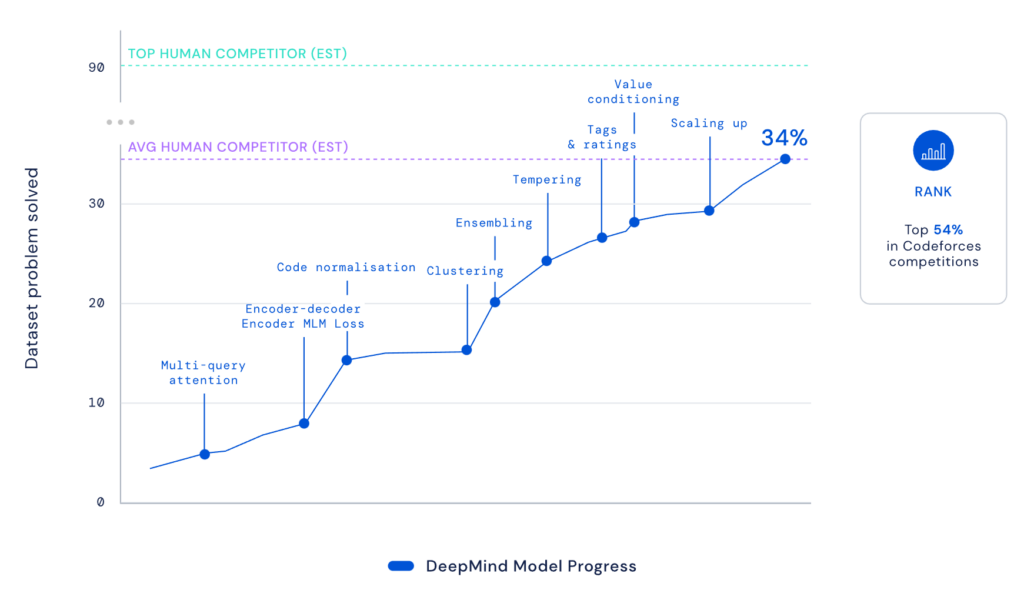

DeepMind reports that AlphaCode reaches the performance of a median human competitor in real-world programming competitions on Codeforces. It does so by scaling up the number of candidate solutions generated per problem to millions, instead of tens as before.

This announcement was originally made on 02 Feb 2022; the work was then published in Science on 08 Dec 2022.
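The idea of generating a very large number of candidate solutions only works if most of them can be discarded cheaply. A heavily simplified sketch of such a sample-and-filter loop is shown below. It is a toy, not AlphaCode: the "model" is a hypothetical random generator of linear functions (`sample_candidate`), and the filter simply checks each candidate against the example input/output pairs of the problem.

```python
import random


def sample_candidate(rng):
    """Toy stand-in for a code model: returns a random function x -> a*x + b."""
    a = rng.randint(-3, 3)
    b = rng.randint(-3, 3)
    return lambda x, a=a, b=b: a * x + b


def filter_candidates(candidates, examples):
    """Keep only candidates that reproduce every example (input, output) pair."""
    survivors = []
    for candidate in candidates:
        try:
            if all(candidate(x) == y for x, y in examples):
                survivors.append(candidate)
        except Exception:
            # A crashing candidate is treated like a wrong one.
            continue
    return survivors


if __name__ == "__main__":
    rng = random.Random(0)
    # The hidden task is f(x) = 2*x + 1; only two examples are given.
    examples = [(0, 1), (1, 3)]
    candidates = [sample_candidate(rng) for _ in range(5000)]
    survivors = filter_candidates(candidates, examples)
    print(f"{len(survivors)} of {len(candidates)} candidates pass the examples")
```

The more candidates are sampled, the more likely it is that at least one correct program survives the filter, which is why scaling from tens to millions of samples matters.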

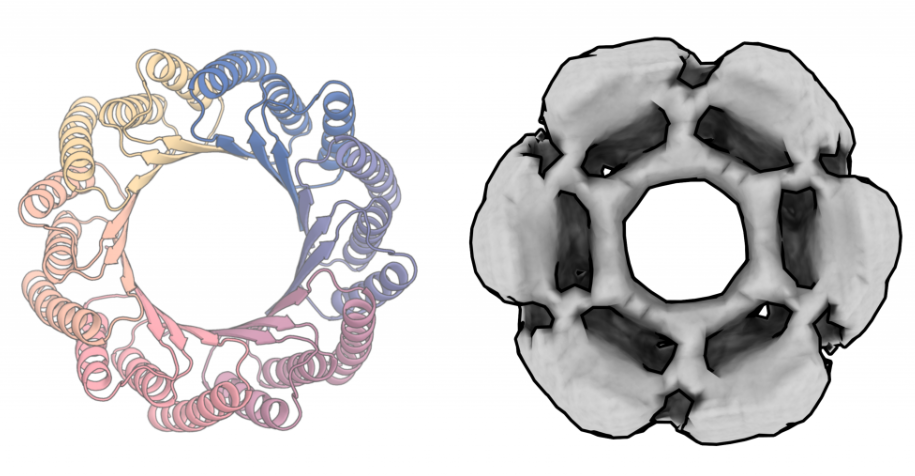

While ProteinMPNN takes a protein backbone (N-CA-C-O atoms, CA = C-alpha) and finds an amino acid sequence that would fold to that backbone structure, RFdiffusion [Twitter] instead generates the protein backbone itself from just a few geometrical and functional constraints such as “create a molecule that binds X”.

The authors used a guided diffusion model to generate new proteins, in the same way that DALL-E uses a diffusion technique to produce high-quality images that have never existed before.

See also this presentation by David Baker.

If I interpret this announcement correctly, it means that drug design is now basically solved (or starts to get interesting, depending on the viewpoint).

This technique can be expected to significantly increase the number of potential drugs for combating diseases. However, animal tests and human studies can be expected to become the bottleneck for these new possibilities. Techniques like organ chips from companies like Emulate may be a way out of this dilemma (before, one day, computational simulations of entire cells, tissues, or the whole body become possible).

People are starting to realize what is possible with ChatGPT, and there are already some great summaries available [1][2]. However, the most exciting prompt I have seen so far is to let ChatGPT act as a Linux terminal. This effectively opens up a complete virtual machine inside ChatGPT, which predicts the proper text output for each user input. Doing this recursively feels mind-blowing …

Lots of people are now experimenting with this idea.

More info about ChatGPT can be found in this video by Yannic Kilcher.

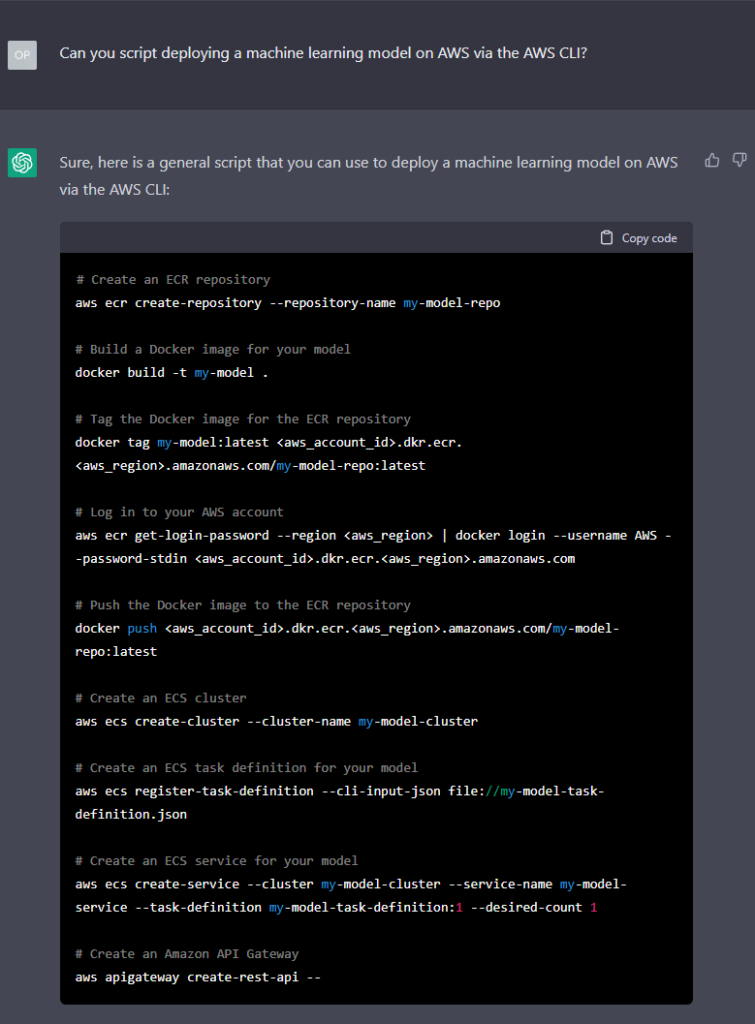

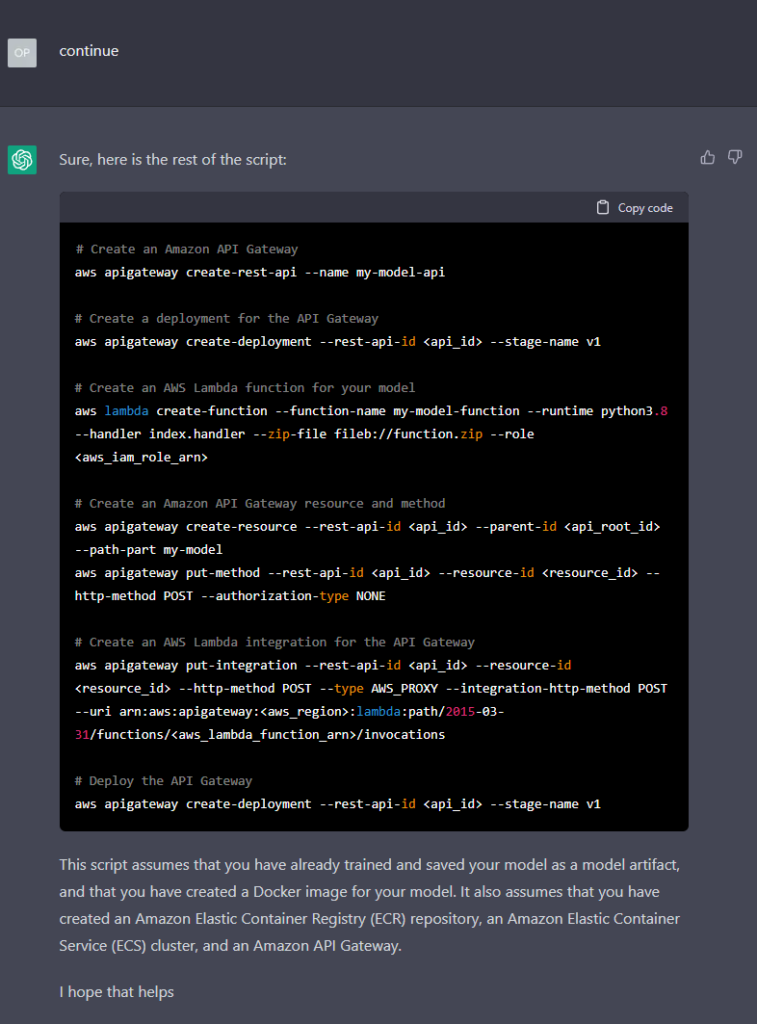

OpenAI released ChatGPT, a language model optimized for dialogue, for testing purposes. The model is trained using Reinforcement Learning from Human Feedback (RLHF).

First tests demonstrate amazing performance.

Example:

© 2024 Stephan Seeger
